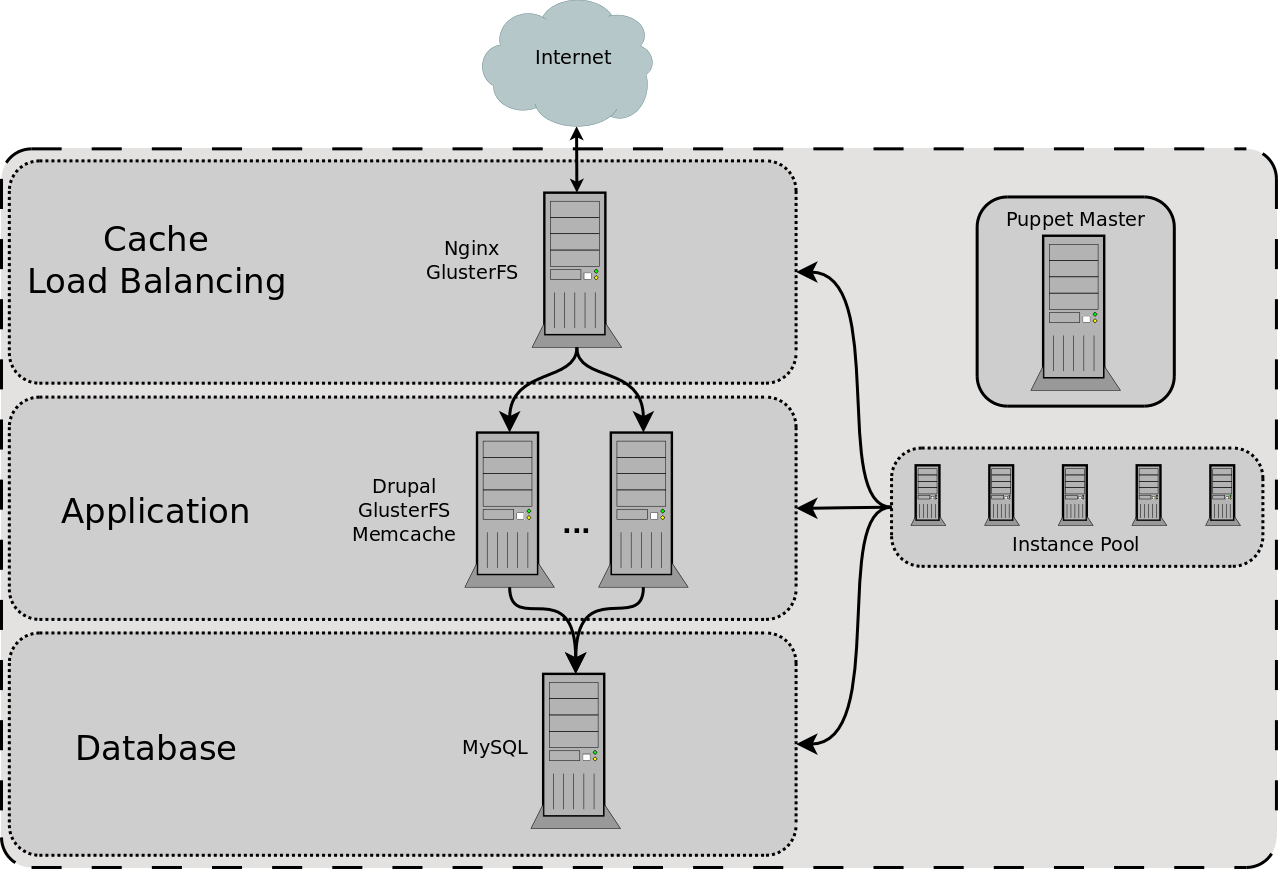

Lately, we have been involved in a project where our clients needed a site capable of serving a large number of anonymous users and a reasonable number of concurrently logged-in users. To reach these goals, we looked to the cloud. We first added as much caching as possible, since this is relatively simple and goes a long way. We then built a distributed system. This blog post describes how we got it to work. A diagram of our architecture is attached, and the various configurations are summarized at the bottom.

First, the anonymous user caching. Anonymous users all view the same content, so if we cache a static HTML page, we can serve it without involving PHP at all. We use Boost to generate these static pages, and nginx serves the cached pages and proxies all other requests to Apache. Since nginx can scale without much of a memory hit, it is much better to use nginx to serve large amounts of static files and let Apache handle the logged-in users and new page requests. For anonymous users, the bottleneck then becomes the network: in a localhost test, ab records well over 10 thousand hits per second served by a 2GB Rackspace instance.

On to logged-in caching. We use APC as an opcode cache, which saves the server from recompiling the PHP code on every page load. The whole compiled code base fits easily in RAM (we typically give APC 128M), and this drastically decreases CPU usage. Logged-in users can now browse the site much faster, but we can still only handle a limited number of them. We can do a bit better: instead of querying MySQL every time we read from Drupal's cache tables, we can store these tables in memory. This is where memcached and the cacherouter module come in.
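The idea of keeping cache lookups in memory rather than in MySQL can be sketched as a read-through cache. This is a minimal Python illustration and not cacherouter's actual code: the `InMemoryCache` class is a stand-in for memcached, and `load_from_db` is a hypothetical placeholder for the MySQL query.

```python
import time


class InMemoryCache:
    """Stand-in for memcached: a dict with per-key expiry (illustration only)."""

    def __init__(self):
        self._store = {}

    def get(self, key):
        entry = self._store.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if expires_at is not None and time.time() >= expires_at:
            del self._store[key]  # expired entries behave like a miss
            return None
        return value

    def set(self, key, value, ttl=None):
        expires_at = time.time() + ttl if ttl is not None else None
        self._store[key] = (value, expires_at)


def cached_lookup(cache, key, load_from_db, ttl=60):
    """Return the cached value, falling back to the database on a miss."""
    value = cache.get(key)
    if value is None:
        value = load_from_db(key)  # the expensive path (MySQL in this post)
        cache.set(key, value, ttl=ttl)
    return value
```

Calling `cached_lookup` twice with the same key invokes `load_from_db` only once; the second call is answered from memory until the entry expires.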

Now, if you've looked at the nginx conf below, you might have noticed that it is also acting as a load balancer. We run Drupal on multiple nodes. The first step in achieving this was putting MySQL on its own node (this does require hardening it) and having Apache live on different machines. To make sure that user-uploaded files and "boosted" cache files are available on all Apache servers, we use glusterfs to replicate files across all machines. We also use glusterfs to replicate the code base so that changes can be made quickly. However, because glusterfs slows down file operations, we rsync the code from the glusterfs mount to the local file system, and the PHP code is not run from glusterfs.
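The `upstream` block in the nginx configuration at the end of this post lists three backends and leaves `ip_hash` commented out, which means nginx rotates through the backends in turn. The difference between the two strategies can be sketched in Python as follows. The function names are hypothetical, and crc32 is used only as an illustrative hash; it is not the hash nginx uses internally.

```python
import itertools
import zlib

BACKENDS = ["web01", "web02", "web03"]  # host names taken from the upstream block


def round_robin(backends):
    """Yield backends in turn, forever (unweighted rotation)."""
    return itertools.cycle(backends)


def sticky_by_ip(client_ip, backends):
    """Pin a client to one backend based on its address.

    nginx's ip_hash keys on the first three octets of an IPv4 address;
    the crc32-modulo mapping here is an assumption for illustration.
    """
    prefix = ".".join(client_ip.split(".")[:3])
    return backends[zlib.crc32(prefix.encode("ascii")) % len(backends)]
```

With rotation, consecutive requests from one client can land on different apache nodes, which works here because sessions live in the shared database and files are replicated. The sticky variant would only matter if some state were local to a single node.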

Putting it all together: the architecture, which is shown in the attached diagram. We are deploying all our servers on Rackspace hosting, starting with an Ubuntu Karmic image. There are three types of nodes: load balancers and static file servers, which we'll refer to as nginx nodes; server nodes with Apache, which we'll refer to as apache nodes; and the database node(s), which we'll refer to as mysql nodes.

The nginx nodes have nginx, memcached and glusterfs installed. They serve static files from a shared folder on a glusterfs mount. Any request that is not cached and is not found among the static files is proxied to the pool of apache nodes. The memcached daemon is part of a pool in which the apache nodes also participate, and which cacherouter uses to distribute cached MySQL queries and the cache tables. The nginx nodes can be replicated for high availability, since the files they serve are replicated in real time via glusterfs.
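A memcached pool works because the daemons do not coordinate with each other: each client decides which server holds a key, so every node configured with the same server list reaches the same entry. The sketch below illustrates this in Python. Plain dicts stand in for the memcached daemons, the `PoolClient` class is hypothetical, and the crc32-modulo strategy is an assumption, since this post does not show which hashing strategy the PHP client uses.

```python
import zlib


class PoolClient:
    """Toy client for a pool of cache servers (dicts stand in for memcached daemons)."""

    def __init__(self, servers):
        # servers: mapping of host name -> backing store shared by all clients
        self.names = sorted(servers)
        self.servers = servers

    def server_for(self, key):
        # Assumed strategy: crc32 of the key modulo the pool size.
        index = zlib.crc32(key.encode("utf-8")) % len(self.names)
        return self.names[index]

    def set(self, key, value):
        self.servers[self.server_for(key)][key] = value

    def get(self, key):
        return self.servers[self.server_for(key)].get(key)
```

Two `PoolClient` instances built from the same server mapping pick the same server for any given key, so a value written through one is visible through the other, and each entry is stored on exactly one server.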

The apache nodes have Apache with mod_php and PHP 5.2 installed, as well as glusterfs, APC and memcached. We can spin up new instances quickly and add them to the pool: once glusterfs is mounted, a new node syncs the files it needs from the other nodes and is ready to receive its share of requests. All the Drupal nodes talk to the MySQL node for the database. The MySQL node can also be replicated for high availability.

Deploying rapidly: what is the point of having a distributed architecture in the cloud if we cannot scale quickly? We use Puppet to configure a freshly spun-up node and add it to the nginx or apache pool.

Wrapping it up: we should be able to follow up soon with a post on performance. The testing we have done so far indicates that the system scales up quite well. We have also compared Rackspace hosting to EC2, and the numbers show that Rackspace is much faster for Drupal, mostly due to network latency. We will soon have numbers and graphs to show it all.

Configuring apc: we set the memory size to 128M with a single bin.

Configuring cacherouter: we used version 6.x-1.x-dev (rather than 6.x-1.0-rc1).

* The dev version had some bug fixes for the memcached engine at the time we installed it

Append the following to your Drupal settings.php:

# Cacherouter

$conf['cache_inc'] = './sites/all/modules/cacherouter/cacherouter.inc';

$conf['cacherouter'] = array(

'default' => array(

'engine' => 'memcached',

'servers' => array(

'web01',

'web02',

'web03',

),

'shared' => TRUE,

'prefix' => '',

'path' => '',

'static' => FALSE,

'fast_cache' => FALSE,

),

);

Configuring boost: most of boost's default settings are fine. We turned on gzip and enabled css and js caching. We also ignore the htaccess rules, since we use nginx to serve the html files.

Configuring nginx (version 0.7.62):

In nginx.conf, in the "http" section:

upstream apaches {

#ip_hash;

server web01;

server web02;

server web03;

}

In the host conf, in the "server" section:

server {

listen 80;

proxy_set_header Host $http_host;

gzip on;

gzip_static on;

gzip_proxied any;

# text/html is always compressed by nginx, so it is not listed here

gzip_types text/plain text/css application/json application/x-javascript text/xml application/xml application/xml+rss text/javascript;

set $myroot /var/www;

#charset koi8-r;

# deny access to files beginning with a dot (.htaccess, .git, ...)

location ~ /\. {

deny all;

}

location ~ \.(engine|inc|info|install|module|profile|po|sh|.*sql|theme|tpl(\.php)?|xtmpl)$|^(code-style\.pl|Entries.*|Repository|Root|Tag|Template)$ {

deny all;

}

set $boost "";

set $boost_query "_";

if ( $request_method = GET ) {

set $boost G;

}

if ($http_cookie !~ "DRUPAL_UID") {

set $boost "${boost}D";

}

if ($query_string = "") {

set $boost "${boost}Q";

}

if ( -f $myroot/cache/normal/$http_host$request_uri$boost_query$query_string.html ) {

set $boost "${boost}F";

}

if ($boost = GDQF){

rewrite ^.*$ /cache/normal/$http_host/$request_uri$boost_query$query_string.html break;

}

if ( -f $myroot/cache/perm/$http_host$request_uri$boost_query$query_string.css ) {

set $boost "${boost}F";

}

if ($boost = GDQF){

rewrite ^.*$ /cache/perm/$http_host/$request_uri$boost_query$query_string.css break;

}

if ( -f $myroot/cache/perm/$http_host$request_uri$boost_query$query_string.js ) {

set $boost "${boost}F";

}

if ($boost = GDQF){

rewrite ^.*$ /cache/perm/$http_host/$request_uri$boost_query$query_string.js break;

}

location ~* \.(txt|jpg|jpeg|css|js|gif|png|bmp|flv|pdf|ps|doc|mp3|wmv|wma|wav|ogg|mpg|mpeg|mpg4|htm|zip|bz2|rar|xls|docx|avi|djvu|mp4|rtf|ico)$ {

root $myroot;

expires max;

add_header Vary Accept-Encoding;

if (-f $request_filename) {

break;

}

if (!-f $request_filename) {

proxy_pass "http://apaches";

break;

}

}

location ~* \.(html(\.gz)?|xml)$ {

add_header Cache-Control no-cache,no-store,must-revalidate;

root $myroot;

if (-f $request_filename) {

break;

}

if (!-f $request_filename) {

proxy_pass "http://apaches";

break;

}

}

location / {

access_log /var/log/nginx/localhost.proxy.log proxy;

proxy_pass "http://apaches";

}

}
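The chain of `if` blocks in the server section builds up the flag string `GDQF` one letter at a time: G for a GET request, D for the absence of a `DRUPAL_UID` cookie, Q for an empty query string, and F for a matching file on disk. The same decision can be sketched in Python as follows. The function name and its parameters are hypothetical and only model the logic; nginx itself does this with the rewrite rules above.

```python
import os


def boost_cache_path(root, method, cookie_header, host, request_uri, query_string):
    """Return the cached file nginx would serve, or None to proxy to Apache."""
    if method != "GET":                        # flag G
        return None
    if "DRUPAL_UID" in (cookie_header or ""):  # flag D: logged-in users bypass the cache
        return None
    if query_string != "":                     # flag Q
        return None
    # With an empty query string the file name ends in "_" plus the extension,
    # e.g. <root>/cache/normal/<host>/about_.html
    for subdir, ext in (("normal", ".html"), ("perm", ".css"), ("perm", ".js")):
        candidate = os.path.join(root, "cache", subdir, host + request_uri + "_" + ext)
        if os.path.isfile(candidate):          # flag F
            return os.path.normpath(candidate)
    return None
```

Any request for which this returns `None` corresponds to the case where nginx falls through to one of the `location` blocks and, if no static file matches, proxies to the `apaches` upstream.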

Configuring glusterfs (version 3.0.3):

There are two files. glusterfsd.vol defines the local "brick". glusterfs.vol describes how to mount and use the bricks.

glusterfsd.vol

# Generated by Puppet

volume posix

type storage/posix

option directory ####

end-volume

volume locks

type features/locks

option mandatory-locks on

subvolumes posix

end-volume

volume iothreads

type performance/io-threads

option thread-count 16

subvolumes locks

end-volume

volume server-tcp

type protocol/server

subvolumes iothreads

option transport-type tcp

option auth.login.iothreads.allow ####

option auth.login.####.password ####

option transport.socket.listen-port 6996

option transport.socket.nodelay on

end-volume

glusterfs.vol

# Generated by Puppet

volume vol-0

type protocol/client

option transport-type tcp

option remote-host ####

option transport.socket.nodelay on

option remote-port 6996

option remote-subvolume iothreads

option username ####

option password ####

end-volume

... # 1 per apache node + 1 per nginx node

volume vol-3

type protocol/client

option transport-type tcp

option remote-host ####

option transport.socket.nodelay on

option remote-port 6996

option remote-subvolume iothreads

option username ####

option password ####

end-volume

volume mirror-0

type cluster/replicate

subvolumes vol-0 vol-1 vol-2 vol-3

option read-subvolume vol-0

end-volume

volume writebehind

type performance/write-behind

option cache-size 4MB

# option flush-behind on # olecam: increasing the performance of handling lots of small files

subvolumes mirror-0

end-volume

volume iothreads

type performance/io-threads

option thread-count 16 # default is 16

subvolumes writebehind

end-volume

volume iocache

type performance/io-cache

option cache-size 412MB

option cache-timeout 30

subvolumes iothreads

end-volume

volume statprefetch

type performance/stat-prefetch

subvolumes iocache

end-volume
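Since glusterfs.vol needs one `protocol/client` stanza per node plus a `cluster/replicate` volume listing them all, it lends itself to being generated from a host list. Our files are generated by Puppet. The Python sketch below only illustrates the same idea and is not our Puppet template; the function names, host names and credentials passed in are hypothetical.

```python
def client_volume(index, host, username, password, port=6996):
    """Render one protocol/client stanza (vol-0, vol-1, ...)."""
    return "\n".join([
        f"volume vol-{index}",
        "  type protocol/client",
        "  option transport-type tcp",
        f"  option remote-host {host}",
        "  option transport.socket.nodelay on",
        f"  option remote-port {port}",
        "  option remote-subvolume iothreads",
        f"  option username {username}",
        f"  option password {password}",
        "end-volume",
    ])


def replicate_volume(count, name="mirror-0"):
    """Render the cluster/replicate stanza over vol-0 .. vol-(count-1)."""
    subvolumes = " ".join(f"vol-{i}" for i in range(count))
    return "\n".join([
        f"volume {name}",
        "  type cluster/replicate",
        f"  subvolumes {subvolumes}",
        "  option read-subvolume vol-0",
        "end-volume",
    ])


def render_client_volfile(hosts, username, password):
    """Render the client stanzas followed by the replicate volume."""
    stanzas = [client_volume(i, h, username, password) for i, h in enumerate(hosts)]
    stanzas.append(replicate_volume(len(hosts)))
    return "\n\n".join(stanzas) + "\n"
```

Adding a node to the pool then amounts to appending its host name to the list and re-rendering the file. The performance translators (write-behind, io-threads, io-cache, stat-prefetch) stay the same and are left out of the sketch.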